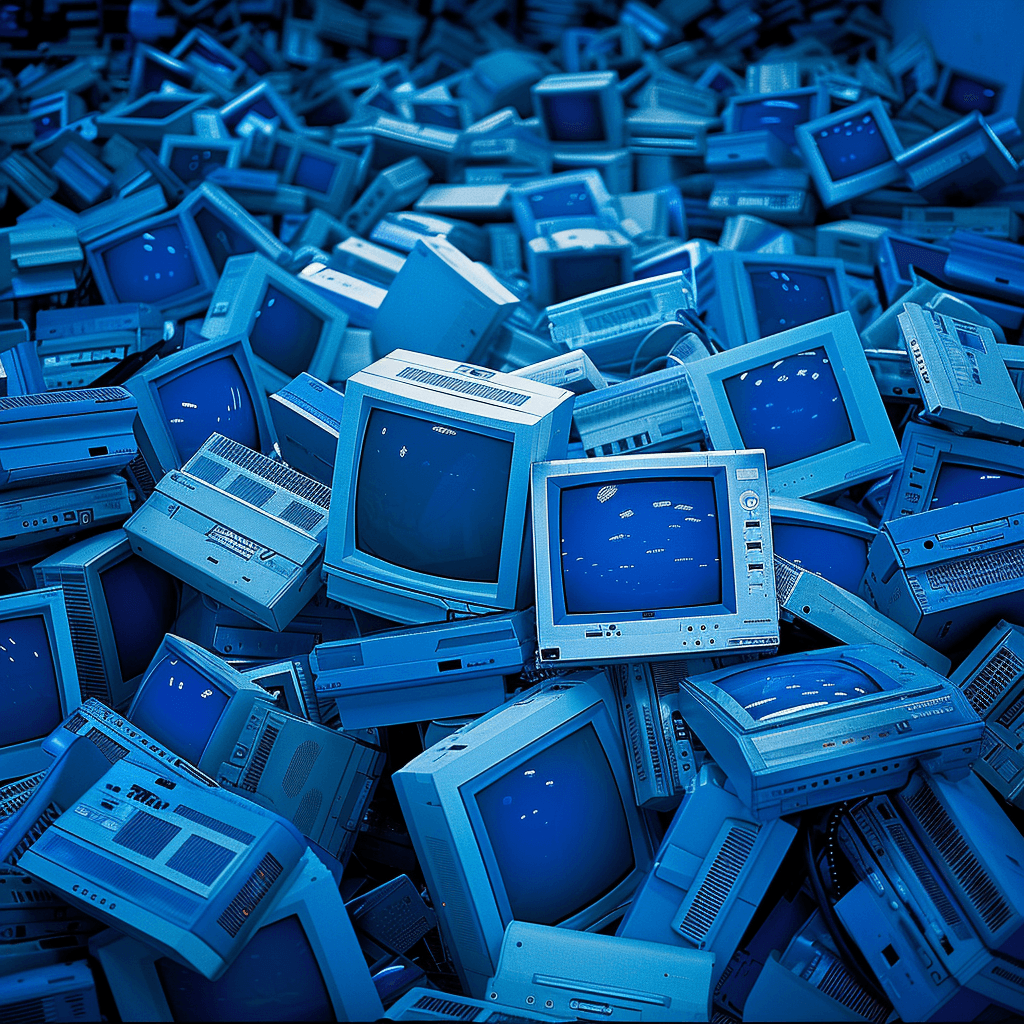

Software Monocultures

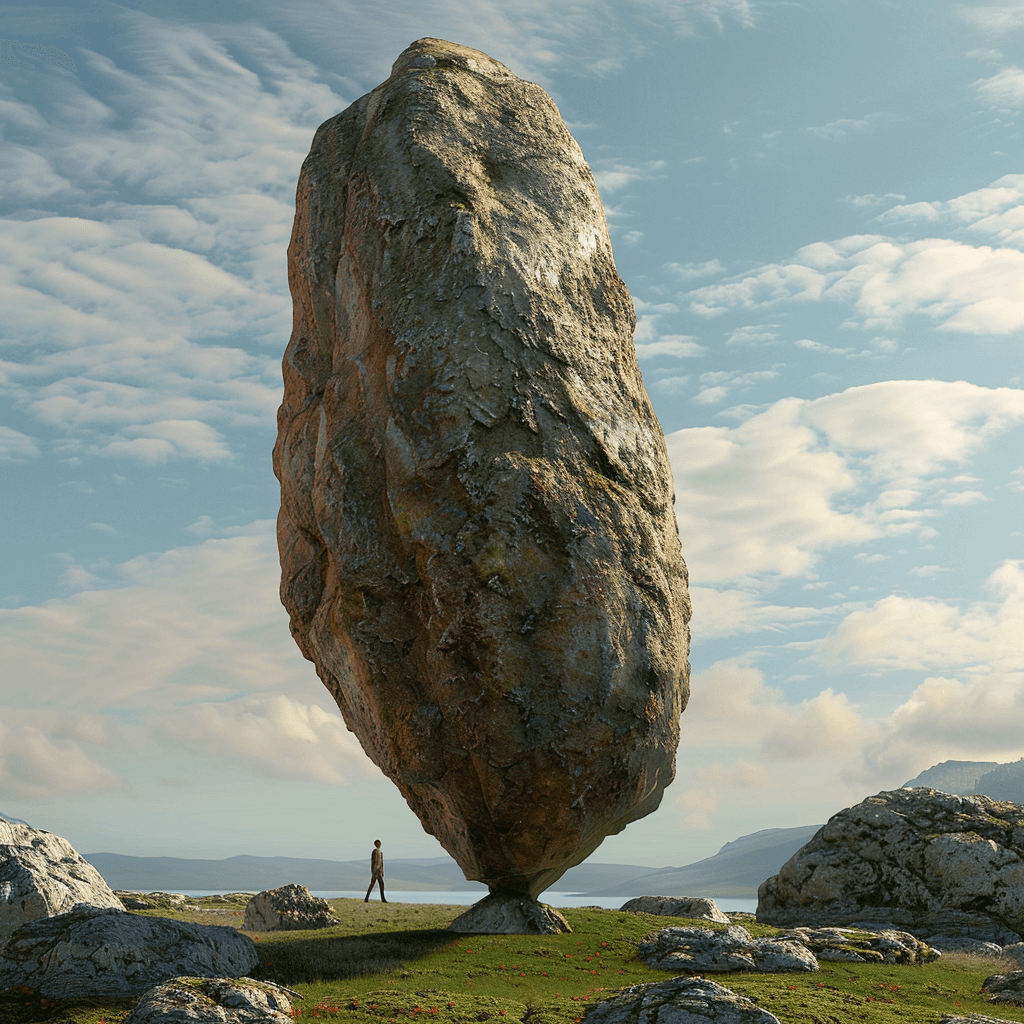

Egg Baskets and Concentration of Risk

It's been a few days since the Great Windows Crash of 2024, and I have a few thoughts about what happened, and things we should consider going forward.

For those who weren't aware, on Friday, July 19th, an automated update to a widely used security program, CrowdStrike Falcon, caused Windows computers around the globe to crash with a blue screen. The fix required physical access to millions of computers. Companies and organizations affected included airports, hospitals, and healthcare facilities around the world, a high percentage of Fortune 500 companies, and much, much more.

My tl;dr thoughts:

- Monocultures (software and hardware) are incredibly problematic.

- Fleet-wide automated updates are fraught.

- Security is hard, and full of tradeoffs.

- Remote management is essential, but sometimes you really need it at the hardware level.

- Software can be brittle.

The long explanation

-

The first thing that popped into my head on Friday was the phrase "software monoculture." This describes the situation where you have a large group of computers all running the same operating system or applications. (The same thing can happen in hardware, where all CPUs from a particular company have the same vulnerability. Since only a few companies make the world's most widely used CPUs, such a bug can easily impact millions of computers or devices.)

With a monoculture, when there's a severe bug or a security exploit, you can end up with a scenario where all of the computers are impacted at the same time. The impact often plays out over days, weeks, or months. We've seen this many times over the years, and it has taken many forms. The Heartbleed security bug a decade ago took years to be mostly resolved. However, we had never seen quite as vivid an illustration of software monoculture until last week, when millions of computers around the world effectively crashed all at once.

In this case, Mac and Linux computers running CrowdStrike Falcon did not have an issue. (Although that wasn't guaranteed unless Falcon works differently on those platforms.) And of course Windows computers not running Falcon didn't have any problems either.

In the case of Heartbleed, it was an issue with a widely used library that ran on multiple operating systems. So perhaps that was more widespread than a software monoculture. Other bad, widespread examples were the Meltdown and Spectre exploits, which impacted widely used CPUs.

-

Automated updates can be problematic, especially if they involve a reboot at an inopportune time, or a crash. When an update is fleet-wide, it can be disastrous. In this case, it was a crash. But it could be even more disastrous if a piece of malware got automatically distributed.
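To make the contrast with a fleet-wide push concrete, here is a minimal Python sketch of a staged rollout: the update goes out in growing waves, and the rollout halts as soon as a wave contains an unhealthy machine. Everything in it is hypothetical and for illustration only. The names `staged_rollout`, `apply_update`, and `is_healthy`, and the 1% / 10% / 100% wave sizes, are my assumptions, not a description of how CrowdStrike or any other vendor actually ships updates.

```python
def staged_rollout(hosts, apply_update, is_healthy, stages=(1, 10, 100)):
    """Push an update in growing waves and halt on the first unhealthy wave.

    hosts        -- ordered list of host identifiers (hypothetical)
    apply_update -- callable that installs the update on one host (hypothetical)
    is_healthy   -- callable returning True if a host is still working (hypothetical)
    stages       -- cumulative percentages of the fleet covered by each wave

    Returns (updated_hosts, halted).
    """
    updated = []
    for percent in stages:
        # Cumulative number of hosts that should be updated after this wave.
        target = max(1, len(hosts) * percent // 100)
        wave = hosts[len(updated):target]
        for host in wave:
            apply_update(host)
            updated.append(host)
        # Check the wave before touching anyone else.
        if not all(is_healthy(host) for host in wave):
            return updated, True
    return updated, False


if __name__ == "__main__":
    fleet = [f"host-{i}" for i in range(1000)]
    # Simulate a bad update: every machine that receives it becomes unhealthy.
    updated, halted = staged_rollout(fleet, lambda host: None, lambda host: False)
    print(len(updated), halted)
```

With a bad update, only the first wave (1% of the fleet in this sketch) is affected before the rollout stops. The tradeoff is speed: security content that is rolled out slowly leaves the rest of the fleet exposed for longer.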

-

Security is hard and full of tradeoffs. Products like CrowdStrike Falcon exist to solve a very real problem: security exploits or attacks that aren't known ahead of time, and that target servers and/or desktops. When you're facing a large, distributed attack, you need to be able to detect it and react to it. The need for such a product or operating system feature hasn't gone away.

-

I would guess most major companies are able to remotely manage their Windows desktops and servers. However, the most widespread products only work if the operating system is running. Servers often have remote management capabilities that don't require the operating system to be running, but desktops... not so much. Between point-of-sale devices and remote work, this is a big issue.

-

Software can be brittle, and when it fails, it can fail spectacularly. A lot of people have rightly focused on the failings of CrowdStrike. It was clearly a bad update that should never have been pushed to millions of computers. And there has also been a lot of commentary on how Falcon ran inside the operating system. However, I think a case can be made that an operating system shouldn't fail like that when there's a driver issue. Drivers need elevated permissions, but with a lot of modern CPU features, perhaps there could be better isolation between the operating system and its components.

What do you think of all of this? Were you impacted? Were you part of the recovery effort? What lessons do you think we should have learned? And what do you think we (in technology) should do differently going forward?